Visitors to the Computer History Museum frequently want to know: what was the first computer? The first personal computer? In his recent post, “Programming the ENIAC: An Example of Why Computer History is Hard,” Computer History Museum Board Chair Len Shustek notes that one of the difficulties of computer history, and indeed, history of technology in general, is the question of “firsts”: what was the “first” X, or in alternate form, “who [first] invented X?” The problem is that for many of these “firsts,” there is no simple answer, because as Len pointed out, “What ‘first’ means depends on precise definitions of fuzzy concepts.” What seem to be questions with easy, factual answers quickly devolve into debates over semantics.

As we will see in this post, these issues have been the subject of much discussion among historians of computing and our curatorial staff. The question of “firsts” seems simple, but is actually quite complex. We’ll look at how these issues are dealt with by historians Thomas Haigh, Mark Priestley, and Crispin Rope in their recent book, ENIAC in Action: Making and Remaking the Modern Computer. The ENIAC has long been considered the “first” computer in the public mind, and anchors the Museum’s “Birth of the Computer” gallery in our main Revolution exhibit. Haigh and his collaborators, in fact, argue that ENIAC deserves credit for a new “first”: the first computer to run a program stored in memory. And yet, is the ENIAC significant simply because of these firsts, or because of its influence and impact on later computers?

Historical facts are not as self-evident as they seem. Claims to “firsts” are claims over the meanings of terms and the concepts they refer to; they are debates about language. For example, the question of the “first” computer really depends on what one means by “computer.” Prior to the 1950s, the term actually referred to a person who worked in a team to calculate the solutions to equations. Len notes that historian and former CHM curator Mike Williams “famously said that anything can be a first if you put enough adjectives before the noun.” For computers, these adjectives include “automatic,” “digital,” “binary,” “electronic,” “general-purpose,” and “stored program,” as well as “useful,” “practical,” or “full-scale.” This is because during the 1930s and 1940s, many early computers and calculators (at the time, there was no clear separation between the two terms) had some of these features but not others. Some computers were analog (really a completely different category of machine), some required manual steps in their operation, many were mechanical or electromechanical (using telephone relay switches instead of electronic vacuum tubes), many stored and operated on decimal instead of binary numbers, many were special-purpose and could not be reconfigured, and some were small test machines or prototypes built to prove the engineering feasibility of a design and never used for actual productive work. I will discuss “stored program” in more detail below. Depending on which combination of these adjectives one chooses, the answer to the “first” computer question can be the Harvard Mark I, the Atanasoff-Berry Computer (ABC), the British Colossus, Konrad Zuse’s Z3, the ENIAC, or even Babbage’s Analytical Engine, which he never built. All of these machines are presented or mentioned in CHM’s “Birth of the Computer” gallery.

In that gallery, we have addressed this question directly in our video, “Who Invented the Computer?”

In addition to discussing all the various differences between the early claimants and the qualifiers for which each can claim to be “first,” the video discusses the different ways the verb “invent” can be interpreted. For instance, is it enough to imagine a machine? Or does one have to create a design? Does one have to actually build a machine based on such a design? And does the built machine actually have to work as designed, and be useful?

This results in multiple inventors. Charles Babbage conceived of, but never built, the Analytical Engine, a mechanical, programmable, general-purpose machine. Alan Turing described a “universal machine” that, given an infinitely long memory tape, could compute any problem presented as a set of instructions; it became the modern theoretical definition of a computer in computer science. Konrad Zuse built the Z3, the first fully programmable computer. With sponsorship from the U.S. Army’s Aberdeen Proving Ground, University of Pennsylvania researchers J. Presper Eckert and John Mauchly built the ENIAC, the first electronic computer controlled by a “program.” Manchester University prototyped the Manchester Baby, the first “stored-program” computer, built on the EDVAC design that came out of the ENIAC work at Penn. And in 1973 a court ruled Eckert and Mauchly’s 1964 ENIAC patent invalid, based in part on the fact that Mauchly had observed John Atanasoff and Clifford Berry’s demonstration of their electronic and digital, but neither programmable nor general-purpose, computer: the ABC.

Marc Weber, founding curator of CHM’s Internet History Program, expanded on these ideas in a blog post last year, arguing that invention is rarely a singular event. It occurs in stages, each of which might be accomplished by a different person. First, the idea needs to be conceived; this was what Charles Babbage did with the Analytical Engine. Second, the technology needs to be “born”: a prototype needs to be built and tested, and in some rudimentary form, “work.” Third, it needs to mature: it must be made to work reliably and/or be deployed more widely, employed by actual users for real work rather than existing only as a prototype in a lab. Marc notes that for TCP/IP, this moment would be the deployment of the protocols over the entire ARPANET in 1983, while for the World Wide Web it would be the public release of the WWW code through the Internet, which allowed third parties, including the authors of Mosaic, to write their own browsers with new features like embedded graphics.1

The ENIAC story shows how important Marc’s third stage of maturation can be. In their new book, historian Thomas Haigh and co-authors Mark Priestley and Crispin Rope look in detail at the ENIAC (its design, construction, use, conversion, maintenance, and decommissioning) and at its symbolic role in later historical and legal debates. Though first operational in December of 1945, ENIAC spent its first six months in limbo between “experimental” and “actually working.”2 Even after it began to be used regularly for real scientific calculations, it was extremely unreliable. The New York Times reported that only 5% of ENIAC’s 40-hour work week was spent on production work. Although at CHM we have explained to visitors that vacuum tube burnout was the source of this unreliability, Haigh et al. discovered that “intermittents,” hard-to-find failures including power supply voltage spikes, shorted cables, and bad solder joints, were more frequent causes of breakdown.3 Moreover, although ENIAC theoretically ran faster than the Army’s electromechanical relay calculators, those machines could be set to run fully automatically and produce a result with no human intervention. Only after ENIAC’s conversion to a new “stored program” mode of programming and operation in 1948 (which I will discuss in depth below) was it able to spend more than half of its time doing productive work.4

In his blog post, Len Shustek notes that senior CHM curator Dag Spicer advocated avoiding what Dag called the “F-word” entirely. Sixteen years ago, Dag wrote an editorial in which he called for an end to the question “what or who was first?” in the history of technology.5 Dag also recommended banning other superlatives such as “biggest” and “fastest.” The reasons Dag outlined for the ban are well known to historians of science and technology. Most “discoveries” or “inventions” are not objectively singular but multiple, with many scientists or engineers pursuing the same problem in parallel. Particular inventions such as the light bulb take place within a larger socio-technical system and are often an incremental step, rather than a giant leap, in a succession of advances. Claims to “firsts” rely on “the concealment of context,” Dag wrote. At best, they are an oversimplification, a “twelve-year-old’s” version of history, serving to prop up the heroic, singular “Great Man” myth that dominates the popular image of science and technology. Dag left the corollary unsaid: at worst, they can be outright fabrications made by parties with an agenda. Marc Weber, in his own blog post, points to the telegraph, telephone, and radio as instances of multiple invention that have since been subsumed by the myth of the singular genius inventor. “From the steam engine to the Web, most everything has been independently conceived at least three times,” he says.6

Nevertheless, priority is a high-stakes game. For most academic scientists, being “first” is the primary form of professional currency. Priority brings publicity and credibility in the form of publications, which translate into tenure, grant funding, awards, and accolades. For inventors and technology entrepreneurs, “firsts” have direct financial consequences. Priority can determine the validity of patents, on which a billion-dollar company or industry can hinge. Patents artificially narrow the history of technological development to criteria selected for the purposes of the law, not historical clarity. Yet the realities patents create by their existence (or non-existence) are extremely consequential for technology industries. For instance, one of the most well-documented court cases in the history of computing was Honeywell, Inc. v. Sperry Rand Corp., in which Eckert and Mauchly’s patent for the ENIAC, which Sperry claimed covered all automatic electronic digital computers, was invalidated. Had this not occurred, all computer makers after 1973 would have been forced to pay licensing fees to Sperry, possibly aborting the nascent PC industry. Finally, one’s place in history can be the ultimate stake. Again, the ENIAC and its successor, the EDVAC, provide a canonical example: who deserves credit for coming up with the “stored program” architecture, John von Neumann or Eckert and Mauchly? Von Neumann failed to share credit with Eckert and Mauchly in his First Draft of a Report on the EDVAC, which became widely disseminated, and what we now call the “stored-program” architecture became associated with von Neumann’s name. Historians now acknowledge Eckert and Mauchly’s role, but this took years, and the term “von Neumann architecture” is still widely used.

Despite these problems, historians of computing cannot avoid “firsts” entirely, especially when dealing with an important historical milestone such as the ENIAC. Len highlights how, in their new book, Haigh et al. have refocused attention on the post-1948 ENIAC, when it was converted to what they call the “modern code paradigm,” a key component of what other historians and computer pioneers have labeled “the stored program concept.” In its original form, ENIAC was not “programmed” but “set up” by manually re-wiring cables between its different components. Haigh et al. say that configuring ENIAC for a problem was like constructing a special-purpose computer anew each time out of a general-purpose kit of parts.

ENIAC’s function tables, which were banks of switches that could be manually dialed to represent digits from 0 through 9, were a form of read-only memory (ROM) used initially to store constant data. Values could be read from them into ENIAC’s vacuum tube-based accumulators at full electronic speeds. After its 1948 conversion, programs’ instructions, coded as decimal numbers, were stored in the function tables instead. One of these function tables is displayed in the Computer History Museum’s “Birth of the Computer” gallery.

After the conversion to an EDVAC-inspired design, however, the wires between the components were fixed in place, and a true “program” was loaded onto the “function tables,” which amounted to an addressable read-only memory (ROM) configured by setting manual switches that represented decimal numbers. Another way of looking at this conversion is that the original modular ENIAC was now permanently configured not as a special-purpose machine but as an EDVAC-style general-purpose computer.7 In this new mode, in April 1948, ENIAC ran its first “program,” a Monte Carlo simulation of nuclear fission. Thus, despite pointing out the problems with “firsts” in numerous places, most notably in their introduction,8 Haigh et al. have established a new “first” for computer historians, something Len finds particularly notable in his own post. What had previously been celebrated as the first run of a “program” on a “stored program” computer, a test program that calculated the highest proper factor of 2¹⁸ on the prototype “Manchester Baby” in June 1948, has now been displaced by the ENIAC Monte Carlo simulations. Haigh et al. argue that it does not matter that ENIAC’s programs were stored in ROM, because many modern computers store much of their programming in ROM, and because ENIAC ran this program according to the “modern code paradigm,” which I will explain below.
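The Manchester Baby’s test program mentioned above is simple enough to restate in a few lines. What follows is a minimal modern sketch in Python, not a transcription of the original 1948 code: it tries each candidate divisor downward from 2¹⁸ − 1 and, because the Baby had no division instruction, tests divisibility by repeated subtraction. The function name `highest_proper_factor` is our own, chosen for illustration.

```python
def highest_proper_factor(n: int) -> int:
    """Return the largest divisor of n that is smaller than n (for n >= 2).

    Candidates are tried from n - 1 downward; each remainder is found by
    repeated subtraction rather than division, echoing the Baby's approach.
    """
    candidate = n - 1
    while candidate > 1:
        remainder = n
        while remainder >= candidate:  # repeated subtraction in place of division
            remainder -= candidate
        if remainder == 0:
            return candidate
        candidate -= 1
    return 1  # no divisor other than 1 was found: n is prime


print(highest_proper_factor(2 ** 18))  # 131072
```

The answer, 2¹⁷ = 131,072, was known before the program ran, which is precisely what made such a calculation useful as an engineering check and uninteresting as a scientific result.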

Klara and John von Neumann

Haigh et al. have a complex relationship to “firsts.” They argue in their book that it is more important to look at practice and impact than to focus on “firsts,” and most of the book explores these aspects of ENIAC rather than its “first” status. Nevertheless, they consider the Monte Carlo simulations to be a “first” worth documenting, especially as it unseats the Manchester Baby as the previous record-holder. In personal correspondence with me, Haigh pointed out that he always asks himself, “Would I care about this even if it were second?” In the case of trivial test programs like tables of squares, for which the answers are already known, his answer is “No.” In the case of the Monte Carlo simulations, it is “Yes”: they are historically significant in their own right, because they produced new results for Los Alamos, because they reveal that such calculations were, in fact, data- and I/O-intensive, because they highlight the central role of von Neumann’s wife Klara in programming the machine, and because many features of modern programming practice, including subroutines,9 were pioneered here.10 That the Monte Carlo calculations are also a “first” is just gravy. Haigh et al. can have their cake and eat it too.

In pursuit of this argument, Haigh et al. are very careful about definitions. They avoid the term “stored program” in favor of what they call the “modern code paradigm.” In both the primary literature and the existing historiography of computing, the term “stored program,” in conjunction with “concept,” “computer,” or “architecture,” has been used as an alternative to “EDVAC-type” or “von Neumann architecture.” As we discussed above, the latter fell out of favor among historians because it masked the contributions of Eckert and Mauchly. These terms are usually taken to be synonymous, referring to a computer design in which the machine is controlled by program instructions represented as numerical codes. Encoded in this manner, a program’s instructions are indistinguishable from the data the program manipulates, and both are stored in the same rewriteable memory. This means that a program can itself be treated as data, which allows programs to modify other programs or even themselves.
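To make the idea that a program can itself be data concrete, here is a minimal Python sketch of a hypothetical toy machine. Its opcodes and its decimal “opcode × 100 + address” encoding are invented for illustration and do not correspond to ENIAC, EDVAC, or any historical instruction set. Because instructions and data share one memory, the loop below steps through a short list of numbers by loading its own ADD instruction as data, incrementing the address field, and storing it back, a technique early stored-program machines relied on before index registers became common.

```python
# Hypothetical toy machine for illustration only; the opcodes and the
# "opcode * 100 + address" encoding are invented, not ENIAC's or EDVAC's.
HALT, LOAD, ADD, SUB, STORE, JUMP, JZ = range(7)


def ins(op: int, addr: int) -> int:
    """Encode one instruction as an ordinary decimal number."""
    return op * 100 + addr


def run(memory: list[int], max_steps: int = 10_000) -> list[int]:
    """Fetch, decode, and execute instructions held in the same memory as the data."""
    acc, pc = 0, 0
    for _ in range(max_steps):
        op, addr = divmod(memory[pc], 100)  # an instruction is just a number
        pc += 1
        if op == HALT:
            return memory
        elif op == LOAD:
            acc = memory[addr]
        elif op == ADD:
            acc += memory[addr]
        elif op == SUB:
            acc -= memory[addr]
        elif op == STORE:
            memory[addr] = acc
        elif op == JUMP:
            pc = addr
        elif op == JZ:
            if acc == 0:
                pc = addr
        else:
            raise ValueError(f"unknown opcode {op} at address {pc - 1}")
    raise RuntimeError("step limit exceeded")


# Sum the three numbers at addresses 20-22 into address 30.
memory = [0] * 40
memory[0:12] = [
    ins(LOAD, 30),   # 0: acc = running total
    ins(ADD, 20),    # 1: acc += next element; this instruction gets rewritten
    ins(STORE, 30),  # 2: save the running total
    ins(LOAD, 1),    # 3: load instruction 1 as if it were data...
    ins(ADD, 31),    # 4: ...add 1 to its address field...
    ins(STORE, 1),   # 5: ...and store the modified instruction back
    ins(LOAD, 32),   # 6: decrement the loop counter
    ins(SUB, 31),    # 7
    ins(STORE, 32),  # 8
    ins(JZ, 11),     # 9: leave the loop when the counter reaches zero
    ins(JUMP, 0),    # 10: otherwise go round again
    ins(HALT, 0),    # 11
]
memory[20:23] = [5, 7, 9]  # the data to be summed
memory[31] = 1             # the constant one
memory[32] = 3             # number of elements left to add

run(memory)
print(memory[30])  # 21
print(memory[1])   # 223, i.e. the instruction now reads ADD 23
```

The trick depends on a rewriteable memory shared by code and data: a program held in read-only memory could not rewrite its own instructions in this way.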

Haigh et al. explore the history of the term “stored program” but also note its deficiencies: it is a loose term that variously refers to three separate concepts, which Haigh et al. define in detail. Each of these is a “paradigm” in historian of science Thomas Kuhn’s sense of “an exemplary technical accomplishment based on a new approach”:11 a canonical technical example that became a template for later elaboration and development.

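These three paradigms are:

- the “EDVAC hardware paradigm,” which concerns the physical design of the machine, above all a large, rewriteable memory holding both instructions and data;
- the “von Neumann architectural paradigm,” which concerns the logical organization of the machine as described in von Neumann’s First Draft; and
- the “modern code paradigm,” which concerns the form and capabilities of the program itself: a sequence of numerically coded instructions held in addressable memory.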

Separating out these three clusters of concepts, commonly lumped together as the “stored program concept,” allows Haigh et al. to make a very precise historical claim: the post-conversion ENIAC that ran the Monte Carlo simulations was firmly within the “modern code paradigm,” even though it did not meet the criteria for the “EDVAC hardware paradigm,” as it lacked a large rewriteable memory. Spurred by a conversation with historian Allan Olley, Haigh pointed out to me that, unlike “stored program concept,” which tends to focus on the hardware architecture or the kind of memory used, “modern code paradigm” directs attention to the form and capabilities of the code that controls the computer, or the kind of program that can be run on it, rather than how to categorize the computer itself.

The precision of these concepts allows Haigh et al. to make their “first” claim: the Monte Carlo calculations are the “first” computer program run on a computer implementing the “modern code paradigm,” not the “first” computer program run on a “stored program” or von Neumann architecture computer. Having acknowledged that the problem with “firsts” is often imprecision in the meaning of terms, they have been careful to define precisely what they mean before making their claim. The fuzziness of the “stored program” concept thus cannot be used to broaden the reach of their limited claim.

Another key point is that the terms “EDVAC hardware paradigm,” “von Neumann architectural paradigm,” and “modern code paradigm” are what historians call “analysts’ categories”: concepts that historians, in this case Haigh et al., use to describe the past. They are not terms used by people at the time, which historians call “actors’ categories.” One of the problems with the term “stored program” is that it was not used in the 1940s: it does not appear at all in von Neumann’s “First Draft of a Report on the EDVAC,” in which he described the new computer, or in the Moore School lectures, where the EDVAC design was disseminated among other computer researchers. “Stored program” emerged only in 1949 within IBM and spread in the 1950s through its use by IBM engineers. In the 1950s, then, “stored program” was an “actors’ category.”

In the 1970s, with pioneers such as Herman Goldstine contributing to the history of computing literature, the term “stored program” entered the vocabulary of historians and subsequently became an “analysts’ category” used to separate all modern computers from their ancestors.14 When historians use a term, they are careful to separate “analysts’ categories” from “actors’ categories,” because claiming that a historian’s own categories are the same as those the historical actors used at an earlier time is confusing and anachronistic. One cannot simply apply the terms in common use today retroactively; new words and meanings emerge over time, and even words that previously existed shift and gain new meanings.

Careful attention to this distinction is a mark of good history. Haigh et al. do not at any point claim that the Monte Carlo simulations mark the beginning of “software,” even though they claim these were the first programs to run in the “modern code paradigm.” Although in contemporary usage “computer programs” and “software” are interchangeable, in the 1960s, “Not all ‘software’ was programs, and not all ‘programs’ were software.”15 In “Software in the 1960s as Concept, Service, and Product,” Haigh traces the history of the term “software” itself: “software” was never used in the 1940s, and in the 1960s it often encompassed operating system software but not the application programs that users wrote themselves. Often the dividing line between a “program” and “software” was whether it had been written in-house or obtained from an external organization.16

In “Software and Souls; Programs and Packages,” Haigh, taking up a suggestion from historian Gerard Alberts, argues that the key component of the word “software” is the “ware.” A program that one writes and never shares with anyone else is not “ware.” “Ware” does not necessarily imply a commercial transaction: open source software still counts as software, and indeed much early software was exchanged freely among members of the IBM user group SHARE. But it does require packaging for distribution to others. Haigh writes that “Programs became software when they were packaged, and not everything in the package was code.”17 Indeed, as an actors’ category, the term “package” for carefully prepared sets of computer programs being shared predated the term “software.” Taking “software” as an analysts’ category, and using Haigh’s definition of it as “programs packaged for distribution to others outside of one’s organization,” it might be possible to locate the first “software” in the packages exchanged by SHARE members, even though they themselves did not use the term. It can be confusing, however, when the same word can refer to either an analysts’ category or an actors’ category; a historian must be careful to indicate in what way she is using a given term.

What can happen when people do not take such care? Many claimants to firsts reinterpret their earlier accomplishments in light of more recent developments and retroactively claim to have invented something of immense influence on the recent accomplishment. Rarely can we say that they understood the eventual significance of their work at the time; they were motivated by problems relevant to their own time and place. For example, after personal computers became widespread in the 1980s, many prominent computer scientists, including such luminaries as Alan Kay, who was involved in inventing personal computing at Xerox PARC, began to reinterpret older computer systems, such as the biomedical minicomputer LINC, as “personal computers” in light of their new understanding.18

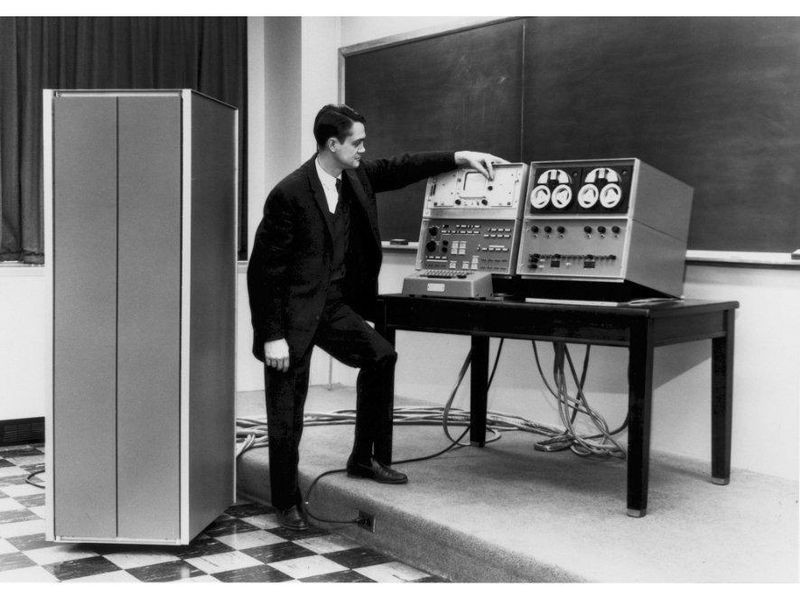

Wes Clark demonstrating the LINC at Lincoln Labs. Image © Massachusetts Institute of Technology (MIT). Lincoln Laboratory. Catalog #102630773

Writing history backwards in this way, taking the categories and concerns of the present and simply applying them to the past without regard to context, is something historians learn to regard as a cardinal sin. While LINC and its antecedent, TX-0, were single-user, interactive machines, and thus in this sense “personal,” they were also very large and expensive compared to the desktop machines of the 1980s commonly understood as “personal computers,” and were generally available only to institutions.

By highlighting LINC’s design as an interactive machine for an individual user, Kay correctly notes the influences on his own work in developing the notion of the Dynabook, which led to the Alto computer at Xerox PARC, a perennial favorite for the title of “first personal computer.”19 Kay’s humility and his acknowledgement of his debt to his intellectual forebears are admirable, as is his highlighting of important historical technologies that have fallen out of popular consciousness; all inventors stand on the shoulders of others.

Nonetheless, retroactively applying the term “personal computer,” with all its modern connotations (small size, low cost, ease of use, and availability for purchase by consumers), to LINC opens up a can of worms. If LINC, why not its ancestor TX-0, which could be controlled interactively by a single user? If TX-0, why not its ancestor Whirlwind, which was also occasionally used by an individual? Why not timesharing mainframes like the PDP-10, which tried to maintain the illusion of a single-user experience, itself inspired by the experience of using TX-0? Why not any computer, of any size, any cost, and any level of accessibility (or not) to ordinary people, that was at some point available for the exclusive use of a single person? Haigh et al. even note that in 1946, ENIAC “remained a quite personal machine, one that could effectively be borrowed and operated by an individual user.”20 Is ENIAC then the first “PC”? Stretched this far, the term “personal computer” loses precision and becomes meaningless.

For some aspects of the definition of “personal computer” popularized in the 1980s, LINC certainly fits: it was indeed small for its time and designed for use by an individual scientist. For others it does not: it would certainly not have been affordable for an average individual. The same applies to other pre-1970s computers. LINC is special and unique, however, due to its rejection of both timesharing and batch processing and its tailoring to biomedical laboratories, which led to design choices that did indeed prefigure aspects of the personal computer, influencing computer designers at DEC and later at Xerox PARC. I believe that LINC’s proper place is in the “prehistory” of the personal computer; it should not be classified as one itself. Locating the birth of personal computing in the 1970s, however, does not mean ignoring important antecedents, of which LINC was one. Similar pitfalls apply if one thinks of timesharing as simply an earlier version of today’s “Cloud.” Both implement a computer utility business model, and while there are roundabout connections between them, the two are based on very different technologies created in different technological and economic contexts. It is a disservice to those who invented and developed timesharing in the 1960s to paint it as simply an evolutionary step toward today’s cloud. In both of these cases, it is important to highlight how earlier developments influenced later inventions, but applying a later term to an earlier technology erases the historical context of the earlier technology.

History is, of course, necessarily written in the present and shaped by current concerns. It involves selecting significant events out of the noise of everyday occurrences in light of their later impact.21 Only with time and distance can we identify some events as historically important. The same is true of technologies. At the museum, we have an informal “ten-year rule”: we try not to collect any artifact until ten years after it first became available, in order to properly assess its historical importance. Impact on contemporary users and influence on later technological developments are thus legitimate criteria for deciding what to collect. However, we must also situate older technologies within their own time and place, rather than simply slot them into a straight line of progress toward the present.

This has an effect on the stories we highlight as “firsts.” In the Computer History Museum’s Revolution exhibit, the most important of the initial galleries is devoted to the “Birth of the Computer.” Although this gallery contains side sections displaying artifacts from, and interpretive text about, other early computers, such as the Zuse Z1, Bell Labs Relay Calculator, Harvard Mark I, III, and IV, the Atanasoff-Berry Computer, the Colossus, the Manchester Baby, JOHNNIAC, SWAC, and others, pride of place belongs to ENIAC. Though a full exploration of the gallery, including a viewing of the aforementioned video, will result in a nuanced view that many early computers in some fashion share the title of “first,” a quick visitor would not be mistaken in coming away thinking that the ENIAC was the most important. Part of the reason ENIAC is so significant, warranting not just its place as the exemplary artifact in the “Birth of the Computer” gallery but also its recent monographic treatment by Haigh, Priestley, and Rope, is its impact and influence. Even at the time, ENIAC was profiled by the New York Times, and unlike some other early experimental machines, it did productive work for almost a decade. Unlike Colossus, its existence was not classified. More significant, however, is the fact that the experience Eckert and Mauchly gained on ENIAC helped lead to the creation of the “stored program” architecture, which became the blueprint for all subsequent computers thanks to the wide dissemination of the EDVAC First Draft. And Haigh et al. show that it was ENIAC itself, after its 1948 conversion, that became the first operational computer running in the modern code paradigm.
Regardless of its status as “first,” because of ENIAC’s high profile and exemplary status, the programming of the Monte Carlo simulations generated practices, such as the use of subroutines, that would affect the way subsequent programs for “stored program” computers were written. Later historical analysis has confirmed ENIAC’s significance through its impact.

But does that mean that technologies which were not influential or impactful do not matter historically? Certainly not. We aren’t only concerned with highlighting the winners. Marc Weber notes in his blog post that the anniversaries of winning technologies also offer opportunities to honor losing ones: alternative networking protocols and standards such as OSI, Cyclades, PUP, and SNA were all replaced by TCP/IP. Each was important in its own time, and under different circumstances, might have beaten TCP/IP. Similarly, early computers that were previously forgotten or unknown, like Colossus and Konrad Zuse’s Z series, are worthy of their place in history. More prominent examples of historical “losers” might be Charles Babbage’s Difference and Analytical Engines, which have had prominent displays in the Computer History Museum. Babbage’s work did not significantly influence the generation of computing pioneers of the 1940s. Though Howard Aiken later discovered Babbage’s work, it had little influence on his design of the Harvard Mark I, which did not have a conditional branch capability.22 Eckert, Mauchly, and von Neumann’s work on the modern code, EDVAC hardware, and von Neumann architectural paradigms was completely independent of Babbage’s ideas. Regardless, we still consider the work of Babbage and of Ada Lovelace to be historically significant. Why?

It could be because Babbage’s Analytical Engine was the first conceived computational machine to be fully general-purpose and programmable, a century before Alan Turing’s imagined universal machine was described and before any actual general-purpose computers were built. Lovelace is sometimes described as the “first” computer programmer (as are ENIAC’s first six female operators), though Lovelace’s “program” was really more of a “trace of the machine’s expected operation” through each step of the calculation.23 This is yet another example of ahistorical labeling, which, despite its progressive intentions, distorts Lovelace’s actual accomplishments. Although their work did not influence later computer designers, we still celebrate Babbage and Lovelace as “firsts.” Their significance must be located elsewhere.

Maybe we simply cannot get away from the question of “firsts.” Although firsts may be uninteresting for professional historians, who understand how they can mislead and reduce complex reality to oversimplified soundbites, the Computer History Museum, as an institution whose primary audience is the general public, cannot avoid “firsts,” though we can try to minimize and complicate the question. Of course it is important to get the facts right, set the record straight, and acknowledge the contributions of the actual pioneers, as in the case of email.24 For early computers, we can identify multiple, evolutionary firsts, and with each first comes a qualifier, some adjective to add to an ever-proliferating list. True “firsts” are complicated and contingent, only ever partially correct, and, of course, dependent on the definitions used by different communities. False “firsts” are easy to identify: a singular, simple narrative of heroic invention, especially when it depends on a definition accepted by no one other than the purported inventor. Just because a question has a complicated answer, admits different points of view, and can be manipulated through language does not mean that anything goes and that all claims are equally valid. Bad history makes false claims about firsts. Good history makes true claims about firsts. Great history, however, doesn’t primarily concern itself with firsts at all (though it may necessarily deal with them as part of its subject matter), but redirects us to ask deeper, more meaningful questions. Great history, like the work of Tom Haigh, Mark Priestley, and Crispin Rope, goes beyond the baseline of facts, the high-school textbook version, into a whole new realm of interpretation. The trick for us at the Computer History Museum is to do this for the general public. As Len Shustek mentions, this is no easy task. But the answer to “Why is this significant?” can’t just stop with “it was first.” “First” is, after all, only the beginning.